Does searching your data feel like you're looking for a needle in a datastack? Trying to uncover key insights and trends buried within millions of unstructured documents is difficult, but what if you had a powerful tool that could swiftly parse and organize all your data? Analyzers could be the solution you're looking for.

In this article, we dive deep into how analyzers extract meaningful insights from your data. By exploring practical examples and key concepts, you'll gain the knowledge and tools to realize the full potential of your text data. With a keen understanding of analyzers, you can gain a competitive edge by surfacing powerful trends and patterns that would otherwise remain hidden in the noise. By the end of this journey, you'll have the skills and confidence to supercharge your analytics and gain a deeper understanding of your data.

Setup

If you would like to apply the concepts and insights from this article in real-time, check out the following post for instructions on setting up your own Elastic environment using Docker.

Before We Begin

If you are new to Elasticsearch, check out this article for an explanation of key Elasticsearch concepts: Nodes & Clusters, Documents, Indices, Mappings, and Shards.

What Are Analyzers?

An analyzer is an essential component of Elasticsearch. Analyzers take raw text and transforms it into a stream of tokens. It does this by breaking down the text into smaller units based on a set rules. The primary purpose of an Analyzer is to make text more relevant and searchable by normalizing and enriching it. Analyzers are used during both the indexing and search phases of Elasticsearch. There are several built-in analyzers by default, but you can also create custom analyzers that can be tailored to for your specific needs.

Defining Analyzers

Analyzers in Elasticsearch can be defined at multiple levels: field, index, and query. However, it's often best to define analyzers at the lowest level and apply them directly to fields.

Field Analyzers are defined in the index mapping, which is the configuration that specifies how data should be stored in that index. More specifically, an analyzer can be defined on each field within the mapping. If an analyzer is not specified, the default analyzer for the field type is implicitly used.

Let's look an example of an analyzer defined in a mapping:

PUT /customers

{

"mappings": {

"properties": {

"name": {

"type": "text",

"analyzer": "whitespace"

},

"comments": {"type": "text"}

}

}

}Example of Analyzer Applied in Name Field

In this example, we are explicitly specifying that the name field should use the "whitespace" analyzer. The comments field does not explicitly specify an analyzer, but this field will implicitly use the "standard" analyzer as that is the default for text fields.

When Are Analyzers Used?

When text is indexed in Elasticsearch, it passes through the analyzer defined for its field in the mapping. The analyzer breaks the text into tokens and applies various processing based on the analyzer's configuration. These tokens are then stored in the inverted index for that field. Elasticsearch uses the inverted index to search for documents that match the query terms entered by the user.

When a search query is executed, the query text is processed by the same analyzer used during indexing. This means that the search query is broken down into the same tokens, allowing Elasticsearch to match the query against the indexed data.

Despite the critical role analyzers play in text processing for both indexing and searching, many people using Elasticsearch are unaware of their existence. This is because Elasticsearch automatically handles most of the details regarding analyzers, making it transparant to users. Additionally, analyzers are not often explicitly specified in Mapping configurations since the defaults work well for most scenarios, which further obfuscats the existence of analyzers. Let's take a more detailed look at what analyzers exist in Elasticsearch.

Types of Analyzers

There are various types of built-in analyzers that ship with Elasticsearch. Some analyzers may be more useful then others depending on how you need to process your data. For a full listing, you can refer to the Elastic docs on built-in analyzers, but we will highlight some below:

- Standard Analyzer: It is the default analyzer in Elasticsearch. It tokenizes text into words based on the Unicode Text Segmentation algorithm.

- Keyword Analyzer: It does not tokenize the text and indexes the entire text as a single term. It is useful for exact matches.

- Language Analyzers: Elasticsearch provides a set of language-specific analyzers that are designed to handle language-specific features such as stemming, stop words, and synonyms. Examples include English Analyzer, French Analyzer, and German Analyzer.

- Fingerprint Analyzer: It generates a fingerprint of the input text by removing whitespace, converting to lowercase, and sorting the terms. It is useful for detecting duplicate documents.

- Path Hierarchy Analyzer: It tokenizes a hierarchical path into separate terms, useful for indexing and searching file paths.

If the built-in analyzers do not meet your needs, you can create your own analyzer. However, before we begin to think about creating a custom analyzer, we must first learn about the structure of analyzers and how they work.

Analyzer Structure

Elasticsearch analyzers are comprised of three fundamental parts that work together to process text: a character filter, a tokenizer, and a token filter. It's worth noting that not all of these components are required. For example, the Standard Analyzer uses just a tokenizer and a token filter, it does not use a character filter.

Character Filter

The Character Filter is responsible for modifying the text before it is tokenized. These filters can be used to strip HTML characters, replace characters with alternatives, stem words, and more.

Tokenizer

The Tokenizer splits the text in tokens based on a set of rules. The rules can be as simple as splitting on whitespace to more advanced tokenizers that specialize in emails or file paths.

Token Filter

The Token Filter modifies the individual tokens generated by the Tokenizer. A common filter is "Lowercase", which will lowercase each token and enables queries to be case-insensitive.

Analyzer Example

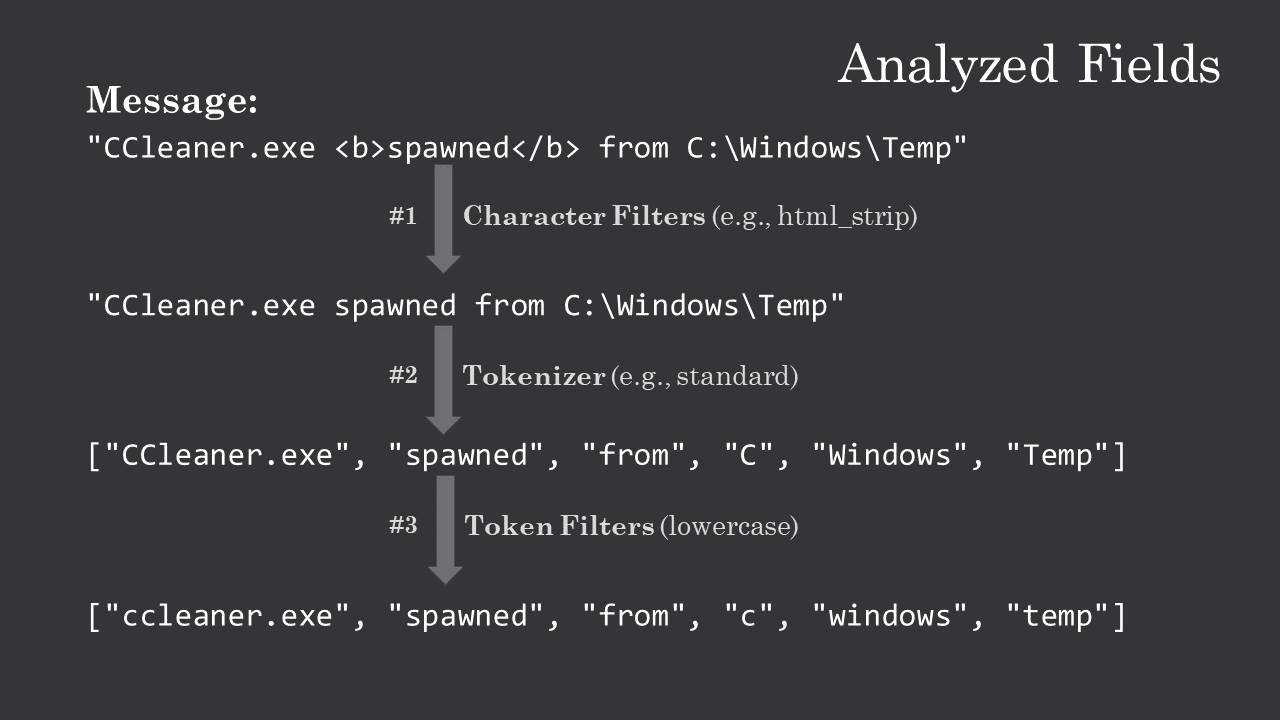

Let's look at an example of text passing through each component of an Analyzer.

- In the first step of the analyzer, the text runs through Character Filters. In this example, we are using the

html_stripfilter, which will remove html syntax from the string. - In step two, the modified text is now run through the Tokenizer. The Standard Tokenizer (which is used by the Standard Analyzer) will split on word boundaries defined by the Unicode Text Segmentation algorithm.

- In the third step, each individual token is run through Token Filters. In this example the lowercase filter is applied, which will lowercase each token.

The resulting tokens and their additional data will be stored in the Inverted Index for the "message" field of this document.

Tokens

Each token generated by the analyzer is not stored as just a simple string of characters; it also contains additional metadata such as the token's position, type, and character offsets.

Position

The position indicates the order in which the token appears in the original text. This proximity value is used by certain phrase and word queries to determine if the text meets specific search criteria.

Type

The type identifiers are set by the tokenizer. It is a classification of each token, such as alphanumeric or number. The type identifier can be used for futher processing or filtering. For example, you could have the analyzer only keep tokens identified as numbers.

Offsets

The character offsets define the exact position of the token within the original text, which is used for highlighting search results. When viewing a document in Kibana, the value displayed for a Text field is the original, unanalyzed text. These offsets take into account characters or words that may have been dropped during analysis, and allows Elasticsearch to correctly highlight the characters in the original text for the user.

Testing Out Analyzers

We can use Elastic's Analyze API to manually perform analysis on text. This is a useful tool because we can view how different analyzers will process, tokenize, and store our text data. Additionally, we can verify how queries will be analyzed, which can allow us to debug our searches.

To use this API, we can paste the following code into the Dev Tools Console.

Request

GET _analyze

{

"analyzer": "standard",

"text": "CCleaner.exe spawned from C:\\Windows\\Temp"

}Using the Elastic Analyze API

Response

{

"tokens": [

{

"token": "ccleaner.exe",

"start_offset": 0,

"end_offset": 12,

"type": "<ALPHANUM>",

"position": 0

},

{

"token": "spawned",

"start_offset": 13,

"end_offset": 20,

"type": "<ALPHANUM>",

"position": 1

},

{

"token": "from",

"start_offset": 21,

"end_offset": 25,

"type": "<ALPHANUM>",

"position": 2

},

{

"token": "c",

"start_offset": 26,

"end_offset": 27,

"type": "<ALPHANUM>",

"position": 3

},

{

"token": "windows",

"start_offset": 29,

"end_offset": 36,

"type": "<ALPHANUM>",

"position": 4

},

{

"token": "temp",

"start_offset": 37,

"end_offset": 41,

"type": "<ALPHANUM>",

"position": 5

}

]

}Result of the Analyzer API

Looking at these results, we can view all the metadata that we discussed before.

When Analyzers Go Rogue

While text analysis is an essential part of the search process, it can also be a source of unexpected and unintended search results. Analyzers will always follow the rules applied to them, but like anything involving computers, it can feel as if analyzers "go rogue" and return search results that are irrelevant, misleading, or downright bizarre.

Check out the following article to explore some of the various ways analyzers can negatively impact search results and how to prevent or correct these issues.

Creating a Custom Analyzer

For information on creating a custom analyzer, check out this article:

Conclusion

Well, we've made it to the end of our journey through the world of Elasticsearch analyzers! It may have been a bit of a technical ride, but I hope you've come away with a better understanding of what analyzers are and how they work. I encourage you to continue exploring the many ways in which Elasticsearch can help you make sense of your information.

The next time you find that needle in the datastack, take a moment to thank your friendly neighborhood analyzer for helping you find it. Thanks for reading!